Probability of AI-Caused Disaster

Investigating time and severity of AI catastrophes

This post summarizes and compares predictions from Manifold and Metaculus to investigate how likely AI-caused disasters are, with a focus on their potential severity. I will explore the probability of specific incidents, like IP theft or rogue AI incidents, in a future post.

This will be a recurring reminder:

Check the most recent probability estimates in the embedded web pages instead of relying on my At The Time Of Writing (ATTOW) numbers.

If you know of a market that I have missed, or if there is a question you think is relevant but is missing from this analysis, please mention it in a comment or message me!

Please go to the forecasting platforms mentioned in the post and make your own predictions!

Without further delay, we shall begin investigating the probability of AI disasters, from smaller disasters to full-scale catastrophes.

Disasters, from small to large

The least severe disasters considered in this post are incidents resulting in $1B of losses or 100 lost lives. These are Manifold’s probability estimates for such a disaster:

According to the resolution criteria, this resolves to Yes if “AI actions are directly causing an incident, accident or catastrophe resulting in $1 billion of damages. If the event causes loss of life, each death counts as $10 million of damages.” Only direct damage counts for this question; for example, reputational damage causing a drop in stock value does not count. Additionally, the AI is required to be “sufficiently advanced” and a “narrow-purpose ML model”, as judged by the question creator.

The AI also needs to be involved in the decisions leading to the disaster. Simply helping a human to, for instance, develop new weapons while not being involved in the decision making would not count.

There are similar questions on Metaculus, asking specifically about an AI malfunction causing loss of lives or economic damage before 2032:

For these two questions, the criteria specify events that “involve an AI system behaving unexpectedly”. A malfunction is required: the disaster can’t result from a human ordering the AI to cause it. It is also required that the disaster wasn’t caused by human negligence, such as failing to properly monitor AI behavior. Because any disaster that resolves the second question also resolves the first, subtracting the current probability for the second question (25%) from that for the first (80%) gives an estimated 55% probability (80% - 25% = 55%) that such a disaster occurs before 2032 but stays within the smaller range: between 100 and 1000 deaths, or between $1B and $200B (2021 USD) in damage.
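The subtraction above works for any pair of nested "at least X" questions. Below is a minimal Python sketch of it; the function name `band_probability` is hypothetical, and the only inputs are the two ATTOW Metaculus values quoted above.

```python
def band_probability(p_at_least_lower: float, p_at_least_upper: float) -> float:
    """Probability that a qualifying event occurs but none reaches the upper threshold.

    Assumes the questions are nested: any event meeting the upper threshold
    also meets the lower one, so P(upper) can never exceed P(lower).
    """
    if p_at_least_upper > p_at_least_lower:
        raise ValueError("Inconsistent forecasts: upper-threshold probability exceeds lower-threshold one")
    return p_at_least_lower - p_at_least_upper


# Metaculus values quoted above (ATTOW)
p_100_deaths_or_1b = 0.80
p_1000_deaths_or_200b = 0.25
print(f"{band_probability(p_100_deaths_or_1b, p_1000_deaths_or_200b):.2f}")  # 0.55
```

The explicit check matters: if the two forecasts were ever inverted, a plain subtraction would silently return a negative "probability".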

On Manifold there is a series of questions predicting the size of an AI-caused catastrophe before 2029, created by the user Scrooge McDuck. For these questions to resolve to Yes, the AI must act maliciously, recklessly, or with poor judgement, similar to the two Metaculus questions above. If the AI follows orders from an authorized human, it doesn’t count. It also has to be a single event, not a series of smaller disasters.

Except for the question “Does an AI disaster kill at least 100,000 people before 2029?”, which 28 unique forecasters have bet on ATTOW, these markets have not received much attention yet. Since they currently have fewer than 15 unique traders each, you should take their predictions with an appropriate grain of salt. For instance, the probability of AI killing at least 10,000 people (estimated at 56%) cannot be larger than the probability of killing at least 1,000 people (estimated at 55%). The predictions are contradictory ATTOW.
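A quick way to catch contradictions like this is to check that the probabilities for "at least N deaths" never increase with N. A minimal sketch, assuming the ladder of questions is given as a dict from death threshold to probability (the helper name is hypothetical, and only the two values quoted above are filled in):

```python
def find_inconsistencies(ladder: dict[int, float]) -> list[tuple[int, int]]:
    """Return adjacent threshold pairs where the higher threshold has the higher probability.

    'At least N deaths' events are nested, so P(>= N) must not increase with N.
    Any violation shows up between some pair of adjacent thresholds.
    """
    thresholds = sorted(ladder)
    return [
        (low, high)
        for low, high in zip(thresholds, thresholds[1:])
        if ladder[high] > ladder[low]
    ]


# Two of the Manifold values quoted above (ATTOW); other rungs omitted.
manifold_ladder = {1_000: 0.55, 10_000: 0.56}
print(find_inconsistencies(manifold_ladder))  # [(1000, 10000)]
```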

The estimated probability of an AI disaster killing 100,000 people has been relatively stable over the last few months ATTOW:

Manifold also has a question for a disaster involving “at least 1,000,000 deaths or US $100 billion in damage directly attributable to AI”, with an estimated 32% probability of occurring before 2030:

Unlike the previous questions, it is enough for AI capabilities to be used to cause the disaster. The disaster can be caused by the AI’s decisions, but it could also be caused by AI misuse or use in conflict, for example. And unlike the question “Does an AI disaster kill at least 100,000 people before 2029?”, economic damage can also lead to a Yes resolution. These two differences are likely the reason for the higher estimated probability, even though this question asks about 1,000,000 lost lives instead of 100,000. Forecasters might expect a high probability of AI being used maliciously or causing economic damage without directly killing that many people.

This market instead asks about a disaster causing $1T of damage before 2070 (44% ATTOW):

The higher probability of disaster compared to the previous question, which considered $100 billion in damage or 1,000,000 deaths before 2030, indicates that the forecasting community places a relatively high probability on a large disaster happening after 2030.

Extreme disasters

Now we shift focus to the really extreme disasters, causing the death of at least 10% of the world population. For this we can use some questions from the Metaculus question series called the Ragnarök Question Series, which investigates how likely an extreme disaster or extinction event is and what might cause it. The predictions for the question series are visualized here.

First off, how likely does the Metaculus community think an extreme disaster is this century? The question below operationalizes this with a straightforward criterion: “This question will resolve as Yes if the human population (on Earth, and possibly elsewhere) decreases by at least 10% in any period of 5 years or less.” This definition of ‘catastrophe’ or ‘extreme disaster’ is used for all questions in this section.

There is some disagreement between forecasters on this question: ATTOW, the lower and upper quartiles of individual predictions are 14% and 52% respectively, with the median at 33%.

Conditional on such a catastrophe occurring, this question asks if it is likely to be caused by an AI failure-mode, defined as a catastrophe “resulting principally from the deployment of some artificial intelligence system(s)”:

Forecasters disagree even more on this question than on the last: the lower and upper quartiles of individual predictions are 15% and 75% respectively. Combining the community estimate of 40% ATTOW with the 33% estimate for an extreme disaster (a decrease in world population of at least 10%), we can estimate the probability of AI causing such a disaster as

P(Extreme AI Disaster)

= P(Extreme AI Disaster | Extreme Disaster) x P(Extreme Disaster)

= 0.4 x 0.33 = 0.132

In summary, the two questions above imply a 13.2% probability of an AI-caused extreme disaster this century, but with very high uncertainty.
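The calculation above can be written as a small Python sketch. The sensitivity loop is an illustrative addition, not part of the Metaculus questions: it plugs the quartiles of individual forecasts into the same product, which gives a feel for the spread but is not a proper uncertainty interval.

```python
def joint_probability(p_ai_given_disaster: float, p_disaster: float) -> float:
    """P(AI disaster) = P(AI disaster | disaster) * P(disaster).

    Valid because every extreme AI disaster is also an extreme disaster.
    """
    return p_ai_given_disaster * p_disaster


# Community estimates used above (ATTOW)
print(f"{joint_probability(0.40, 0.33):.3f}")  # 0.132

# Crude sensitivity check using the individual-forecast quartiles instead.
# Quartiles of individual forecasts do NOT multiply into quartiles of the product.
for p_cond in (0.15, 0.40, 0.75):
    for p_dis in (0.14, 0.33, 0.52):
        print(f"{p_cond:.2f} x {p_dis:.2f} = {joint_probability(p_cond, p_dis):.3f}")
```

Across that grid, the product ranges from about 2% (0.15 × 0.14) to 39% (0.75 × 0.52), which is one way to see how much disagreement sits behind the 13.2% figure.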

Assuming that such a catastrophe happens, this question asks when:

The median seems to have stabilized between 2035 and 2037 for over a year ATTOW, which might partly be because there have been relatively few new predictions during this period.

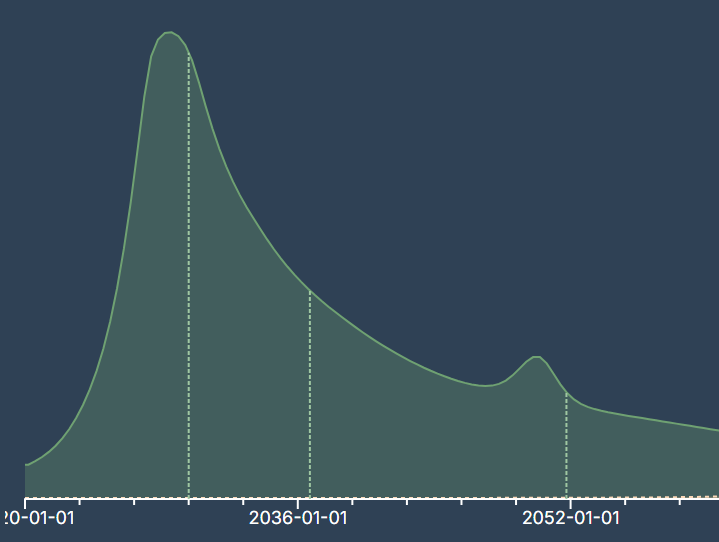

For this type of question, the estimated probability density function is much more relevant than the median. For instance, ATTOW the community seems to consider 2027 to 2030 the years in which such a catastrophe is most likely to happen, as indicated by this image of the density function:
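How can the most likely years sit well before the median? A right-skewed distribution over arrival time puts its peak early and stretches its tail far into the future, which drags the median later. The sketch below illustrates this with a log-normal distribution whose parameters (`mu`, `sigma`, and the 2024 start year) are invented for illustration and are not fitted to the Metaculus forecast.

```python
import math


def lognormal_mode_and_median(mu: float, sigma: float) -> tuple[float, float]:
    """Closed-form mode and median of a log-normal distribution."""
    mode = math.exp(mu - sigma**2)  # peak of the density
    median = math.exp(mu)           # 50% of the mass on each side
    return mode, median


# Hypothetical parameters for "years from 2024 until the catastrophe";
# chosen for illustration only, NOT fitted to any forecast.
START_YEAR = 2024
mode, median = lognormal_mode_and_median(mu=math.log(12), sigma=0.8)
print(f"most likely year ~ {START_YEAR + mode:.0f}, median year ~ {START_YEAR + median:.0f}")
# most likely year ~ 2030, median year ~ 2036
```

The larger `sigma` is (the more uncertain the forecast), the further the peak moves ahead of the median, which is why the median alone can hide when forecasters think the risk is concentrated.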

Before we examine extinction probability, we take a look at this question:

If AI causes an extreme disaster, will the disaster wipe out most of humanity? Metaculus estimates a 50% probability ATTOW, but again there is strong disagreement, with lower and upper quartiles of 15% and 87.5% respectively for individual predictions.

Extinction

“Will humanity be wiped out?” is a type of question where you can never win by betting Yes on a prediction market; if humanity is wiped out, you will not exist. This means that traders are incentivized to bet No, since that at least gives them a chance to make a profit. This is true regardless of whether the market uses play money (like Manifold’s mana) or real money (like Manifold’s sweepcash, which is exchangeable for USD). To circumvent this problem, Eliezer Yudkowsky created a question that will resolve before it is actually answered:

When the question resolves to N/A (assuming we survive at least until 2027), everyone who has traded on the market gets their mana back. However, “any profit or loss you make on this market will be reflected in your current wealth”. In practice, this means that forecasters on this question are betting on how the prediction will change over time. If they bet on Yes and the estimated probability increases before 2027, they can cash out the profit before the market resolves.
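To make this incentive concrete, here is a minimal sketch of a simplified Yes trade on such a market. It assumes each Yes share is bought and sold at exactly the market probability, ignoring Manifold’s automated market maker, slippage, and fees; the trade itself is hypothetical.

```python
def mark_to_market_profit(stake: float, buy_price: float, sell_price: float) -> float:
    """Profit from buying Yes shares at buy_price and selling them at sell_price.

    Simplified model: a Yes share costs its price (the market probability) in mana.
    On an N/A-resolving market the share never pays out, so selling is the
    only way to realize a gain. Slippage, fees and market-maker mechanics are ignored.
    """
    shares = stake / buy_price
    return shares * sell_price - stake


# Hypothetical trade: 100 mana of Yes at 13%, sold after the market moves to 20%
print(f"{mark_to_market_profit(100, 0.13, 0.20):.1f}")  # 53.8
```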

Despite the odd trading incentives for forecasting existential threats, more Manifold forecasters are asking about it. Here are a few highly similar questions, with associated probability estimates and number of unique traders that have made bets on each market:

Martin Randall: Will AI wipe out humanity before the year 2030? - 5%, 1.1k traders

Tripping: Will AI wipe out humanity before the year 2040? - 7%, 453 traders

James Bills: Will AI wipe out humanity before the year 2060? - 12%, 198 traders

MNK19: Will AI wipe out humanity before the year 2080? - 13%, 22 traders

James Dillard: Will AI wipe out humanity before the year 2100? - 13%, 796 traders

Timothy Currie: Will AI wipe out humanity before the year 2150? - 12%, 45 traders

Nick Allen: Will AI wipe out humanity before the year 2600? - 45%, 10 traders

Micael Wheatley: When (if ever) will AI cause human extinction? - 8 traders

There is a notable difference in probability estimates for AI wiping out humanity before 2030: the estimate for Yudkowsky’s question is 13% ATTOW, while the estimate for Martin Randall’s question is 5%. This could partly be explained by the incentive to trade No on markets that resolve on the actual outcome, which Yudkowsky’s N/A resolution is designed to avoid.

Yudkowsky is an AI safety researcher and one of the pioneers of the AI safety community, and he thinks AI-caused extinction is highly likely. To read more about his reasons for believing this, I recommend this article: AGI Ruin: A List of Lethalities.

Perhaps people who are sympathetic to his views are more likely to find his question than Martin Randall’s, resulting in the observed difference. But even if this is the case, it is hard to say which probability estimate is more accurate. A larger fraction of traders on Yudkowsky’s question may be part of the AI safety community, and therefore more informed about risks and able to make better predictions.

The estimates for AI wiping out humanity before 2060, 2080, 2100, and 2150 are all between 12% and 13%, indicating that forecasters don’t think an AI-caused disaster is likely to happen between 2060 and 2150. Nick Allen has posted the question for the years 2200, 2300, and so on up to 3000, but few traders have traded on those questions. Traders have little incentive to trade on questions that will not resolve anytime soon, since it takes longer to make a profit. Metaculus doesn’t have this disadvantage since it is not a prediction market.
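Differencing consecutive "before year X" estimates shows how much probability the markets implicitly assign to each interval. A minimal sketch using the Manifold values listed above (the helper name is hypothetical; the 2600 market is left out because of its small number of traders):

```python
def interval_probabilities(cumulative: dict[int, float]) -> dict[tuple[int, int], float]:
    """Implied probability that the event falls between consecutive deadlines.

    'Before year X' questions are nested, so the cumulative probability should
    never decrease as X grows; a negative interval flags an inconsistency.
    """
    years = sorted(cumulative)
    return {
        (start, end): round(cumulative[end] - cumulative[start], 4)
        for start, end in zip(years, years[1:])
    }


# Manifold estimates listed above (ATTOW); separate markets with different traders.
manifold = {2030: 0.05, 2040: 0.07, 2060: 0.12, 2080: 0.13, 2100: 0.13, 2150: 0.12}
for (start, end), p in interval_probabilities(manifold).items():
    print(f"{start}-{end}: {p:+.2f}")
```

The intervals from 2060 to 2150 come out at or near zero, and the 2100 to 2150 difference is slightly negative (12% versus 13%). That is impossible for nested questions and mostly reflects that these are separate markets with different traders.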

Now let’s look at a few relevant Metaculus questions, starting with this one:

The fine print of the resolution criteria specifies “events that would not have occurred or would have counterfactually been extremely unlikely to occur “but for” the substantial involvement of AI within one year prior to the event.” In other words, if the event doesn’t require AI to occur, it doesn’t count.

If the population is actually reduced to below 5000 individuals, the probability of extinction seems really high. The minimum population size needed for a species to survive in the wild is called the minimum viable population (MVP). This meta-analysis reports a median of 4,169 individuals for published estimates of MVP across species. However, humans may be able to avoid genetic drift and inbreeding problems by modifying their DNA, so if the few surviving members of humanity somehow have access to such DNA-altering technology, they might, at least in theory, be able to avoid complete extinction.

To further analyse the scenario in the question above, the Forecasting Research Institute has also posted conditional questions, which estimate the probability of the scenario depending on whether specific criteria are met. These come from their study “Conditional Trees: A Method for Generating Informative Questions about Complex Topics”. Unfortunately, these conditional questions have not received much attention on Metaculus yet, but since they are highly relevant, I have listed them here:

Conditional on an AI-caused administrative disempowerment before 2030

Conditional on an AI system being shut down due to exhibiting power-seeking behavior

Conditional on deep learning revenue doubling every two years between 2023 and 2030

Conditional on an insufficient policy response to AI killing more than 1 million people

Here is a question for when the disaster might occur:

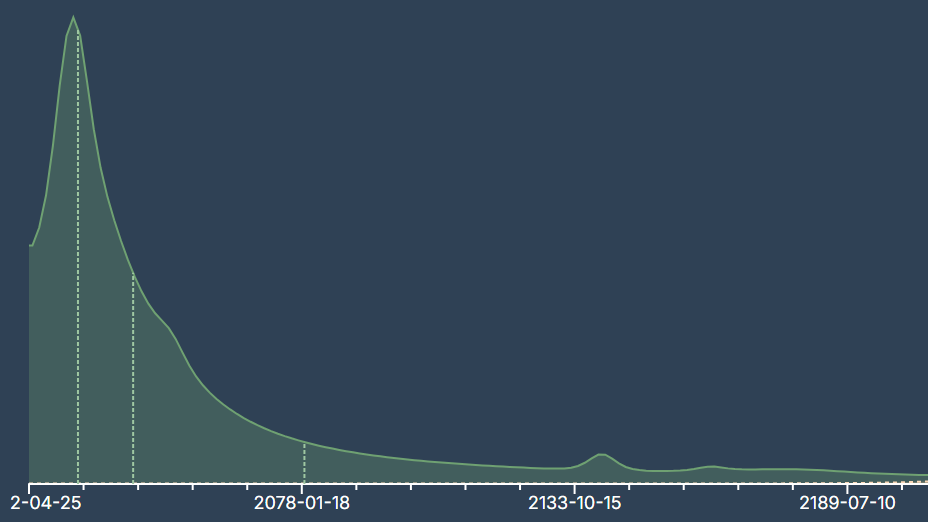

As noted earlier, we need to look at the density function, which currently peaks in mid-2031:

So, we have an estimate of when extinction by AI occurs, if it occurs. We can also ask about the probability that AI caused human extinction, conditioned on humans being wiped out:

Only 15 unique traders have made trades on this question so far.

Relation to AGI and Superintelligence

There are a couple of questions that relate existential risk to AGI and artificial superintelligence. First, this question relates the risk level to AGI arrival time:

To determine that AGI has arrived, this Metaculus question is used: When will the first general AI system be devised, tested, and publicly announced? But as I have discussed in a previous post, I think this question can only provide a rough estimate of AGI arrival time, since its resolution criteria do not exactly match what most would call AGI.

According to the Metaculus community, the sooner we meet the criteria for a "general AI system," the higher the existential risk. Currently, they estimate a 50% risk if it happens between 2025 and 2030, 29% between 2030 and 2040, 12% between 2040 and 2060, and 8% between 2060 and 2100. Combining this with the estimated arrival time of AGI, as explored in this post, results in quite high existential risk estimates: the Metaculus and Manifold forecasters give around 50% or higher probability of AGI arriving before 2030, depending slightly on the definition used for AGI.
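The combination described above is an application of the law of total probability. A minimal sketch, using the Metaculus conditional risks quoted above; only the roughly 50% chance of AGI before 2030 comes from the post, and the remaining arrival probabilities are hypothetical placeholders.

```python
# Metaculus conditional risk estimates quoted above (ATTOW), keyed by AGI arrival period
RISK_GIVEN_AGI = {
    "2025-2030": 0.50,
    "2030-2040": 0.29,
    "2040-2060": 0.12,
    "2060-2100": 0.08,
}


def total_risk(p_arrival: dict[str, float]) -> float:
    """Sum over periods of P(AGI arrives in period) * P(risk | AGI in period).

    Periods missing from p_arrival (e.g. AGI after 2100, or never) contribute
    nothing, so the result is a lower bound when p_arrival sums to less than 1.
    """
    return sum(p * RISK_GIVEN_AGI[period] for period, p in p_arrival.items())


# Only the ~50% chance of AGI before 2030 comes from the post; using that
# period alone gives a lower bound on the implied risk.
print(f"{total_risk({'2025-2030': 0.50}):.2f}")  # 0.25

# Hypothetical split of the remaining mass, for illustration only
# (the missing 0.10 stands for AGI after 2100 or never).
hypothetical_arrival = {"2025-2030": 0.50, "2030-2040": 0.25, "2040-2060": 0.10, "2060-2100": 0.05}
print(f"{total_risk(hypothetical_arrival):.2f}")
```

Since every term is non-negative, the first period alone already implies at least a 25% risk (0.5 × 0.5), provided the conditional estimates are taken at face value.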

The following question uses the same “general AI system” question to determine the arrival time of AGI, and asks whether or not we will be extinct five years after:

The current probability estimate is 7%, notably higher than the 2% estimate for the human population falling below 5000 individuals.

This Manifold question imitates Yudkowsky’s approach and resolves before the date that it asks about:

The conditional probability of human extinction given the development of artificial superintelligence (ASI) by 2030 is currently estimated at 32%, significantly higher than most of the Manifold estimates for AI-caused extinction listed earlier. This indicates that earlier development of ASI is perceived to drastically amplify risks, similar to the higher extinction risk estimates for earlier arrival of AGI.

Conclusion

Individual forecasters have wildly varying predictions on questions like “If a global catastrophe occurs, will it be due to an artificial intelligence failure-mode?” and “If an artificial intelligence catastrophe occurs, will it reduce the human population by 95% or more?”.

The estimated probability of AI wiping out humanity before 2030 ranges from 5% to 13%, and combining AGI timeline predictions with the risk estimates conditioned on AGI arrival time yields an even higher probability estimate.

These disagreements and contradictory predictions show how difficult it is to forecast extreme events, and it is hard to determine which predictions, if any, are accurate. However, there are still important things we can learn from these questions. First, if a prediction changes significantly, this implies that something important has occurred. If a treaty is signed and the catastrophe risk estimates decrease (either directly after the signing, or beforehand if the forecasters expect the signing), this is a good sign that the treaty actually helps reduce risk.

Additionally, the estimated probabilities for extreme disasters or extinction are not zero. While the exact estimates vary wildly, humanity has to deal with risks of global catastrophe this century, especially from AI. Even if we assume the lowest estimates are correct, like the 2% probability of AI reducing the global population below 5000 individuals, the risk is unacceptably high. Would you consider boarding a plane with a 1/50 chance of crashing? With your entire family, and everyone you know? If you knew that the world was facing such a risk, or potentially a much higher one, would you do nothing to prevent it?

Some additional thoughts

I think simply forecasting the damage caused by AI disasters could be difficult compared to forecasting specific incidents and disasters. We might get better predictions on the questions considered in this post by reasoning about things like cybersecurity issues, rogue AI systems, dangerous capabilities, use of AI in conflict (e.g. for weapon development or autonomous weapons), AIs being used by malicious actors, etc. I hope to revisit the predictions in this post when I have investigated this further.

If AI causes a disaster, it could trigger a heavy regulatory response, or even efforts to increase international coordination, with major implications for AI risk. Although this is a dark line of reasoning, a smaller disaster could potentially spur people into action to prevent even larger catastrophes. I plan to devote a future post to exploring potential reactions to disasters in more detail.

While I feel very uncertain about the probability of AI incidents, I suspect that we will see a gradual increase in severity. Strict regulations on things like cybersecurity, and restrictions on openly releasing AI weights, might prevent any notable incidents until AIs are capable enough to circumvent safety procedures. An AI capable of that would probably be able to cause quite large disasters. But since those regulations don’t exist yet, I expect AIs to be stolen, leaked, or simply released on the internet. This would allow malicious or careless actors to cause incidents until access to advanced AIs is restricted (if it ever is), and those incidents will grow in size as smarter AIs become available.

Thanks for reading!

A 74% chance of 100 people dying from AI before 2029 isn't as scary as it sounds if there are millions of people using the technology and many isolated incidents over time add up to 100 (or more). A single AI-related incident in which 100 people die in a short span of time hits very differently psychologically. Maybe the prediction should have differentiated.