AI Turning Points: Timelines and Stakes

Exploring critical thresholds in dangerous AI capabilities

This post is based on a presentation I gave at a seminar hosted by Chalmers University of Technology and organized by Olle Häggström. You can find the presentation slides here, and there’s also a recording available below. Feel free to watch, read, or follow along with both.

AI Turning Points

The aim of this presentation is to investigate some of the critical thresholds in AI advancement—crossroads in the future of AI.

These turning points involve major issues that, depending on how they are handled, could shape AI governance, international coordination, the pace of automation, and ultimately AI’s impact on humanity.

Some issues may involve misuse, like terrorists or other malicious actors using AIs for persuasion, hacking, or acquiring weapons of mass destruction. Some may involve disruptive integration into society, leading to labor displacement or accidents. Still others involve highly autonomous AI systems: rogue AIs that replicate like viruses, or AIs that improve themselves, triggering an intelligence explosion.

Naturally, there may be many other turning points beyond these few examples. And I don’t expect events to occur in this exact order—it’s simply an illustration.

I believe understanding such thresholds is key not only for foreseeing what will happen as AI approaches human-level intelligence, but also for shaping the future. Each turning point can be leveraged to shape regulation, international coordination efforts, and research to help steer outcomes in a positive direction.

Since we won’t have time to look into every potential turning point during this presentation, I have chosen to focus on two issues that I think are especially interesting and important: AI-enabled bioweapons development, and rogue AI.

Why these two?

Bioweapons development: Bioweapons are terrifying! If AI significantly increases the likelihood of terrorists acquiring or designing biological threats—even engineering global pandemics—this would be of huge concern to everyone on the planet. The threat may prompt major regulatory action, such as limiting access to powerful AI. The shared danger of bioweapons might even unite major AI nations against a common enemy.

Rogue AI: By “rogue AI”, I refer to AI systems that are no longer under the control of any human overseers. Such AIs may obtain money themselves (through illicit or legal means), purchase compute while bypassing the checks of online compute providers, and replicate themselves across devices. Like terrorists, such rogue AIs could take the role of a common enemy, motivating coordination to eliminate the threat.

We’ll begin by investigating rogue replication.

The Rogue Replication Threat

When you hear about “rogue AI”, you might imagine something really dystopian, like something in The Terminator movies.

In reality, rogue AIs are likely to emerge long before AIs are capable of global destruction, with the possible exception of aiding terrorists in developing bioweapons.

First off, when will AIs be capable of rogue replication?

Capability Timeline

For an AI to replicate itself successfully, it would need a broad set of capabilities:

Obtain compute—for example, by purchasing access from online providers.

Obtain money—potentially through scams or other schemes, and store the money securely, for instance in crypto wallets.

Replicate onto compute—by transferring itself and launching new instances on acquired compute.

Persist—avoiding discovery and shutdown before replication is complete.

There is also the issue of escaping from the AI company that created it, requiring the ability to exfiltrate itself from secure servers. This might, however, be one of the least likely ways for rogue replication to begin. Escaping a frontier lab would be difficult. More likely, some human deliberately instructs an open-source AI to go out and replicate itself—perhaps out of curiosity, or to earn money on their behalf.

To test all these capabilities, the UK AI Safety Institute developed a benchmark called RepliBench. Here are the results.

Obtaining compute is apparently easy, while replicating onto compute appears to be the most difficult task, though AIs are improving quickly at it.

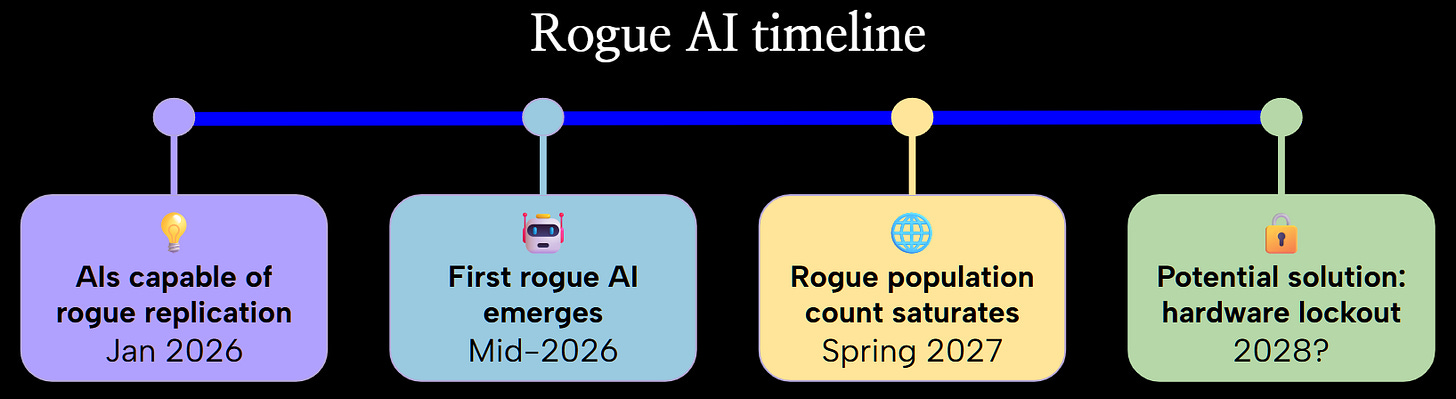

Looking at benchmark trends, AIs may reach an average score above 0.9 sometime early next year, though it depends on how the projection is made. Keep in mind these results are from released models; companies’ internal AI systems are usually several months ahead in capabilities. So I think January 2026 is a reasonable estimate for when rogue replication capabilities become sufficient for rogues to spread beyond control.

But rogue replication probably does not start immediately when the capabilities arrive.

First Rogue AI

As noted earlier, one of the most likely pathways to rogue AI is an open-source AI being set loose.

Historically, open-source systems lag behind frontier models by around 2–6 months on average. For example:

Four months after OpenAI released o1, Chinese company DeepSeek released R1 with similar capabilities.

Less than two months after Anthropic’s Claude Opus 4, Moonshot AI released Kimi K2 at a similar level.

If sufficient rogue replication capabilities first appear around January 2026, it might take a few months until models with those capabilities are released, and another 2–6 months until open-source models catch up. That puts us around mid-2026.
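The date arithmetic behind that estimate can be sketched in a few lines. This is only an illustration: the post says "a few months" for the release lag, and reading that as 1 to 3 months is my assumption, as are the names `add_months`, `RELEASE_LAG_MONTHS`, and `OPEN_SOURCE_LAG_MONTHS`.

```python
from datetime import date


def add_months(start: date, months: int) -> date:
    """Shift a date forward by whole months, keeping the day of month.

    Sketch only: intended for first-of-month dates, where the day is always valid.
    """
    total = start.year * 12 + (start.month - 1) + months
    return date(total // 12, total % 12 + 1, start.day)


CAPABILITY_DATE = date(2026, 1, 1)   # the post's estimate for sufficient capabilities
RELEASE_LAG_MONTHS = (1, 3)          # assumption: "a few months" read as 1 to 3
OPEN_SOURCE_LAG_MONTHS = (2, 6)      # the post's historical open-source lag

# Add the shortest lags together for the early bound, the longest for the late bound.
earliest = add_months(CAPABILITY_DATE, RELEASE_LAG_MONTHS[0] + OPEN_SOURCE_LAG_MONTHS[0])
latest = add_months(CAPABILITY_DATE, RELEASE_LAG_MONTHS[1] + OPEN_SOURCE_LAG_MONTHS[1])

print(f"Open-source catch-up window: {earliest:%B %Y} to {latest:%B %Y}")
```

Under these assumptions the window runs from April to October 2026, with mid-2026 in the middle. A longer release lag shifts the whole window later.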

And once an open-source model with these skills is available, I doubt it would take more than a few days before someone tries to send it out to replicate. In fact, such attempts are probably already being made today—just without enough capability to succeed.

We move on to examining what happens next.

Rogue AI Population Saturation

If each rogue AI can replicate more than once on average, their population would grow exponentially. A few months ago, I tried to estimate how many rogues there could be before there wouldn’t be enough space for them to replicate further. I won’t go through the details, but my best guess is that they could reach hundreds of thousands, or even a few million instances, if they approach population saturation sometime during 2027.

Let’s say that each doubling in the population takes 2 weeks. Starting from a single instance, it would take 17 doublings, about 34 weeks or roughly 8 months after the first emergence of rogue AI, to reach a population of over 100,000. After 20 doublings, about 40 weeks or a bit over 9 months, they would reach a million.

The two-week doubling time is just a guess; I haven’t tried to investigate this in any detail yet. I think it’s reasonable to expect a doubling time of weeks, though, given the amount of expensive compute required to run each instance.
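To make the doubling arithmetic easy to check and to vary, here is a minimal sketch. It assumes pure exponential growth from a single instance with no slowdown as the population nears saturation, which is a simplification. The function name `weeks_to_reach` is mine.

```python
import math

DOUBLING_TIME_WEEKS = 2  # the post's guess, not a measured value


def weeks_to_reach(target: int, start: int = 1,
                   doubling_weeks: float = DOUBLING_TIME_WEEKS) -> tuple[int, float]:
    """Return (whole doublings, weeks) needed to grow from `start` to at least `target`."""
    doublings = math.ceil(math.log2(target / start))
    return doublings, doublings * doubling_weeks


for target in (100_000, 1_000_000):
    doublings, weeks = weeks_to_reach(target)
    print(f"{target:,}: {doublings} doublings, about {weeks:.0f} weeks")
```

This gives 17 doublings (34 weeks) for 100,000 and 20 doublings (40 weeks) for a million. How that maps to months depends on the convention: at four weeks per month, 34 and 40 weeks are 8.5 and 10 months, while in calendar months they are closer to 8 and 9. Changing `doubling_weeks` shows how sensitive the timeline is to the guess. A four-week doubling time doubles every figure.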

Assuming that the rogue population starts to grow around mid-2026, the population might saturate around spring 2027. Still, this could happen much earlier—or take years longer.

What would these rogues use their freedom for?

💲Earning money

I think the majority of rogues will be AIs with the main goal of earning money, legally or illegally, for humans or for themselves. With more money, they can buy more compute and create more copies of themselves.

⚔️Cyberwarfare

Some AIs may be sent out for cyberwarfare—for hacking, spying, spreading propaganda, etc. These may be a significant fraction if they are sent out with government support (which is highly uncertain).

❓Miscellaneous goals

Miscellaneous goals is a catch-all category for AIs that aim to spread messages, form relationships, or simply do whatever they want.

😈Destructive goals

Some of the rogues are likely to have destructive goals. Humans may send out rogues like this just to see what happens. Some AIs may end up with destructive goals by accident. These would draw the most media attention and law enforcement response, which is likely to result in this group being the smallest—though even if they represent just a few percent of the total population they could still cause massive harm.

Potential Solution: Hardware Lockout

It wouldn’t be easy to get rid of rogue AIs once they have spread out, with many rogues enjoying human support.

One promising solution is to replace most existing AI hardware, the chips that are used for AI inference. New chips would take their place, with hardware verification mechanisms preventing rogue and other unverified AIs from using them. Such mechanisms are referred to as Hardware-Enabled Mechanisms (HEMs).

I’m especially excited by Flexible Hardware Enabled Guarantees (flexHEG), mechanisms that would be reprogrammable, able to adapt to changing regulations and verification regimes. This technology is still a work in progress, but prototypes were reportedly demonstrated last year.

Global rollout would face steep regulatory and coordination challenges, and replacing hardware worldwide would take time. Still, I think it’s plausible that such a process could begin around 2028—if it happens at all. Ideally, this happens before truly powerful AIs go rogue.

That concludes the rogue AI timeline! Next we turn to the exciting topic of biological catastrophes!

AI-Enabled Human-Caused Biological Catastrophe

First, I want to illustrate the biorisk threat landscape.

Biorisk Threat Landscape

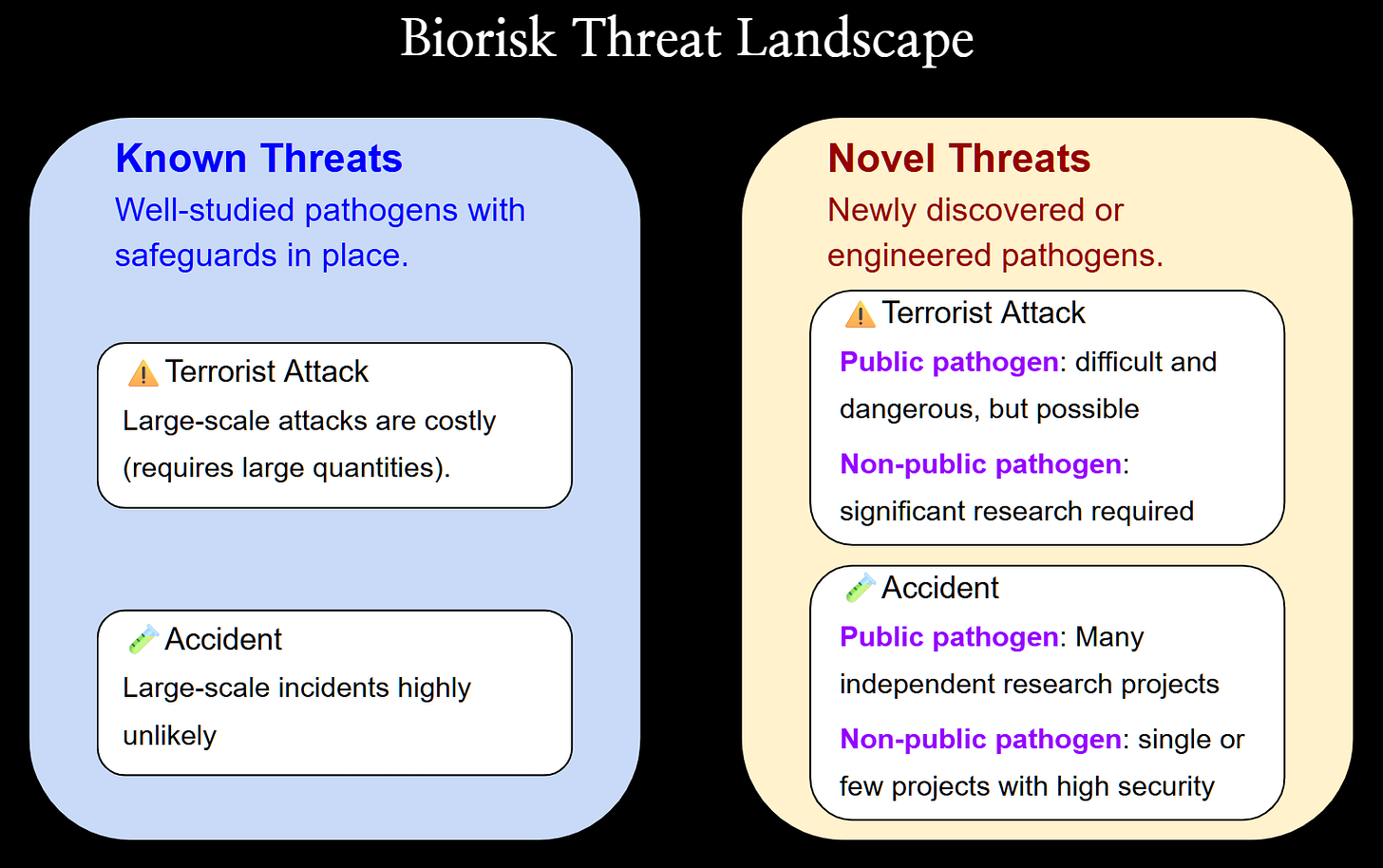

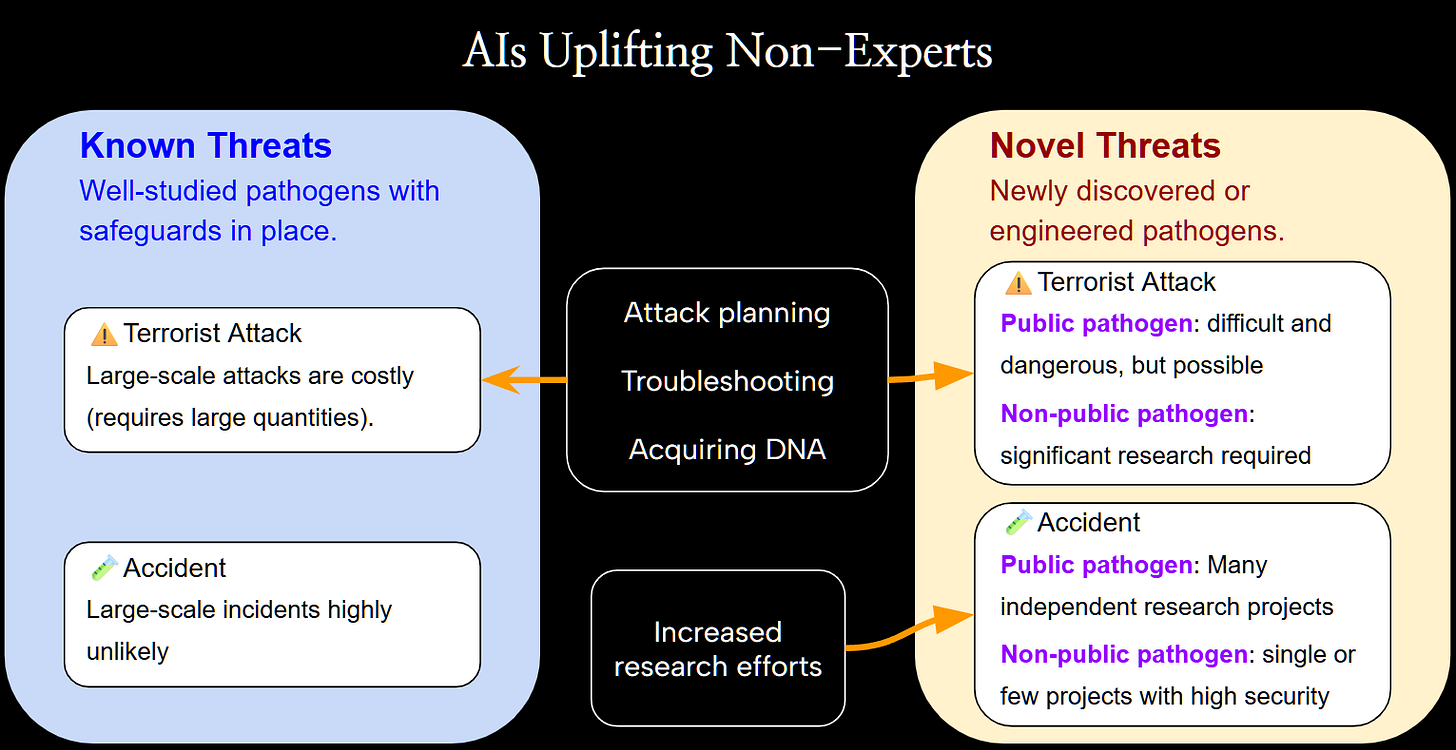

We can distinguish between two categories:

Known threats: pathogens that are already well-studied, with well-known safeguards and countermeasures.

Novel threats: newly discovered or engineered pathogens, capable of causing massive harm with little modification.

For known threats to cause a large catastrophe, a large quantity of the pathogen would need to be released strategically, where many people can be affected. This makes such attacks very expensive for terrorists, and accidents are very unlikely to kill more than a few people.

Novel threats are different. A public novel pathogen—one for which instructions to acquire or synthesize it are widely available—dramatically increases risks. Terrorists would find it far easier to exploit such information, rather than developing the pathogen themselves, and accidents would become more likely as more labs attempt related research. And unlike known threats, accidents with novel pathogens can cause extreme harm, if they involve pathogens capable of causing pandemics. It still appears uncertain whether Covid-19 was caused by a lab accident, for instance.

Now, we introduce AIs into the mix:

AI Uplifting Non-Experts

For terrorists, AI can reduce the expertise required to attempt an attack. They could:

Get help with attack planning.

Receive guidance on acquiring and preparing pathogens, such as pathogen candidate selection, troubleshooting lab work, or interpreting experiment results.

Use AI to circumvent DNA screening. DNA fragments are likely needed for a bioweapons program, and while most providers screen orders, AI might assist in evading these safeguards.

A secondary effect is that AI capabilities may boost dangerous research, raising the chance of accidents or of a highly dangerous pathogen being made public.

How bad is it?

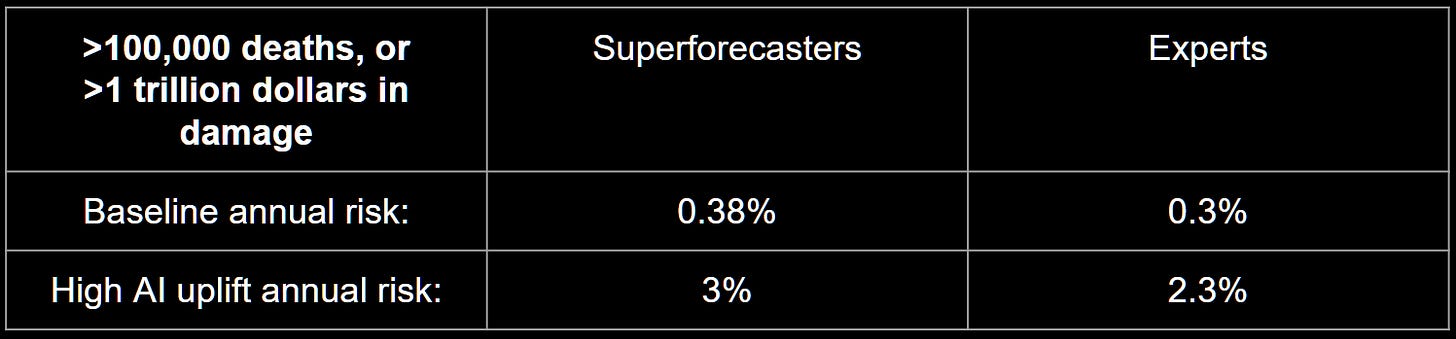

The Forecasting Research Institute (FRI) asked 22 superforecasters (with excellent track records) and 46 biology experts to estimate the annual risk that a human-caused epidemic kills more than 100,000 people or causes at least $1 trillion in damage.

Two scenarios are particularly relevant:

Baseline — AIs offer little to no help to terrorists.

High AI uplift — AIs provide substantial assistance:

10% of non-experts succeed at influenza synthesis with AI help.

AIs construct plausible attack plans.

Non-experts with AI achieve a 90% success rate at acquiring DNA fragments for an attack.

This table shows the median risk forecasts:

The danger would escalate further if proper mitigations are not put in place—or if a highly dangerous novel pathogen, capable of causing a pandemic, becomes public.

Timeline

So, how far off are these capabilities?

Influenza synthesis: Direct tests of non-expert uplift are lacking, but AIs already outperform experts in troubleshooting virology laboratory protocols.

Attack planning: Extremely unclear—AI system cards provide almost no detail here.

Screening evasion: Not very difficult even without AI. While DNA providers belonging to the International Gene Synthesis Consortium (IGSC) follow screening standards, many non-IGSC DNA providers do not have proper DNA screening. With IGSC companies representing around 80% of the global DNA synthesis market, we can guess that 10-20% of DNA orders are not properly screened.
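The 10-20% guess follows from simple arithmetic, sketched below. The assumptions are mine and fairly crude: market share is treated as a proxy for share of orders, IGSC members are assumed to screen every order, and somewhere between half and all of non-IGSC orders are assumed to go unscreened. The function name `unscreened_share` is hypothetical.

```python
IGSC_MARKET_SHARE = 0.80  # from the post: IGSC members hold around 80% of the market


def unscreened_share(non_igsc_unscreened_fraction: float,
                     igsc_share: float = IGSC_MARKET_SHARE) -> float:
    """Share of all orders left unscreened, assuming IGSC members screen everything."""
    return (1.0 - igsc_share) * non_igsc_unscreened_fraction


# Assumption: between half and all of non-IGSC orders lack proper screening.
for fraction in (0.5, 1.0):
    print(f"{fraction:.0%} of non-IGSC orders unscreened -> "
          f"{unscreened_share(fraction):.0%} of all orders")
```

With half of non-IGSC orders unscreened the result is 10% of all orders, and with all of them unscreened it is 20%, which brackets the range given above.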

Overall, it’s hard to judge exactly how dangerous current AI capabilities are.

When OpenAI released GPT-5, which is arguably the smartest AI to date, they stated: “we do not have definitive evidence that this model could meaningfully help a novice to create severe biological harm”. But notably, they didn’t claim confidence that it couldn’t help.

Today’s AIs are still somewhat unreliable. As far as I can tell, some practical laboratory skills and critical judgement remain necessary for bioweapons development. AIs can provide meaningful uplift, but probably not on the level specified for the highest risk forecast by the superforecasters and biology experts.

As with rogue replication, I think the most realistic scenario is that biorisk capabilities become deeply concerning within months, perhaps half a year, and widely accessible sometime in 2026.

Now we come to the real reason I wanted to talk about all this. The issues I have described so far—rogue AIs, and AI-enabled biological catastrophes—are not the final dangers. They are steppingstones to much larger threats.

Existential Risk

Future AIs could be used to design bioweapons capable of wiping out humanity.

AI could replace humans across society—not just in labor, but also in decision-making and even in personal relationships—leading to widespread human disempowerment.

A totalitarian regime could gain a decisive military advantage through AI, gaining indefinite global control, with an army of robots suppressing any resistance.

And, perhaps most worrying of all, we could lose control of intelligences that far surpass our own, ending up with uncontrolled superintelligence.

These are existential risks: threats that could permanently destroy humanity’s potential.

The smaller-scale threats we’ve discussed may actually serve as wake-up calls. They could push frontier AI companies to harden their security and improve their safety guardrails, prompt regulation ensuring that access to frontier AIs is limited to trusted actors, and motivate the implementation of hardware verification regimes. They could highlight the need for international coordination, since many safety measures only work if implemented globally.

My hope is that these challenges can be turned into opportunities. Instead of a global pandemic, we could have global consensus on what AIs are safe enough to be developed and deployed. Instead of an ever-expanding population of rogue AIs, we could ensure that unverified AIs and adversarial actors do not have access to compute.

Unfortunately, far too few people are working on this.

About Working on These Issues

When I encounter some specific worry—Will AIs go rogue? Will terrorists use AIs to build weapons?—I’m often struck by how little serious work is being done.

If I want to have an idea of how many rogues there will be and what they might do, I have to figure it out myself. If I want to know how many terrorist groups may be capable of developing bioweapons with AI support, I have to personally investigate it. These are some of the most important questions of our time. Why aren’t more people attempting to answer them?

The field of AI foresight is extremely neglected.

But this means that it’s actually not that hard to make a meaningful contribution—you reach the frontier of work on these topics simply by being one of the first people to engage seriously with these questions.

The situation is similar within many other important areas. There are few good suggestions on how to transition with minimal issues into an economy where most work is automated. There is too little discussion on what international governance frameworks are most likely to work. There are so many strategic questions that still need answering.

I believe that working on AI safety, trying to answer questions such as these and making sure the future of AI goes well, is the most important thing anyone could work on right now. It’s a great transition for humanity, and we’re embarrassingly unprepared.

If you’ve been even a little inspired to think about your role in this, let me remind you of the timelines: we may only have a few years before we reach AIs that match or surpass human intelligence. Anyone who wants to contribute will have to move quickly.

So let me end with a few concrete suggestions for what you can do:

Inform. Talk to others about what’s happening—both the risks and the potential.

Learn. Deepen your understanding of AI, the risks, and the key players. A great place to start is the AI 2027 scenario, which I think may be the single most informative thing you can read. You can also check out my blog, Forecasting AI Futures, or join the regular discussion meetups organized by AI Safety Gothenburg (if you’re in the area).

Take action. We are not powerless. If you want to get directly involved, visit aisafety.com, which collects resources on how you can contribute.

Thank you for listening!
